There are several popular patterns for creating a replicated set of AMPS instances. One popular pattern is a “cascading mesh”: a topology that receives publishes in one set of instances and then distributes those messages to successive sets of instances. This blog post describes a common approach to creating a mesh of this type, explains how the configuration works, and discusses the tradeoffs involved in this approach.

Cascading Mesh Topology

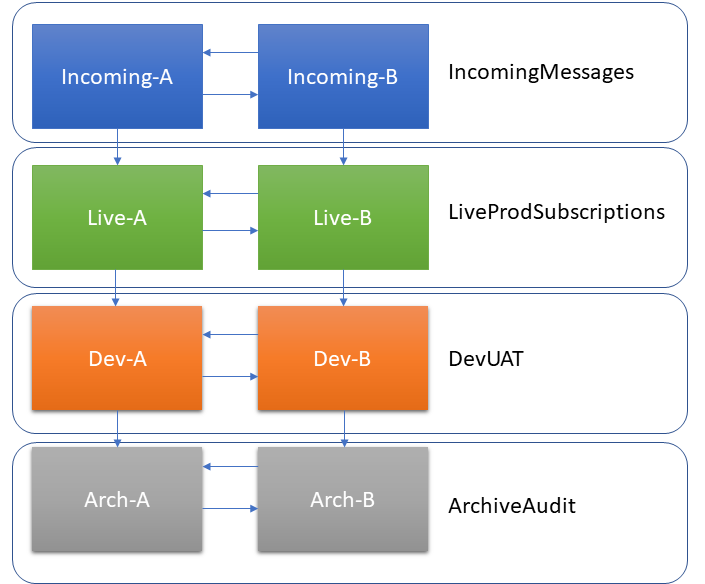

The sketch below shows the topology of the cascading mesh.

The first tier of the mesh has two instances that receive publishes from applications. These instances are part of the “IncomingMessages” group, and replicate both to each other and to the next tier in the mesh.

The second tier of the mesh provides services to subscribers. This tier has two instances that are part of the “LiveProdSubscriptions” group. These instances replicate to each other and also to the next tier in the mesh.

The third tier of the mesh provides a non-production environment for application development and testing. This tier has two instances that are part of the “DevUAT” group. These instances replicate to each other and to the last tier in the mesh.

The final tier in the mesh provides an environment for archival and audit. This tier has two instances that are part of the “ArchiveAudit” group. These instances replicate to each other, but do not replicate anywhere else.

This topology is designed to meet the following requirements:

- All messages are available on all tiers.
- The mesh can survive the loss of a server on any tier (and, in fact, could survive the loss of one server on each tier) and still deliver messages.
- Different types of usage are isolated to different instances. Only approved, production-ready applications will be allowed to access the “LiveProdSubscriptions” instances.
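To make the first two requirements concrete, here is a minimal Python sketch of the topology. It is a model of the message flow, not AMPS code, and it rests on several assumptions:

- Instance names follow the Incoming-A / Live-B naming used later in this post. Archive-A and Archive-B are hypothetical names for the ArchiveAudit tier.
- Every instance passes through everything (a PassThrough of .*).
- Duplicates are discarded.
- Each outgoing Destination sends to the first address in its list that is not down.

```python
# Simplified model of the cascading mesh; not AMPS code.
# Archive-A / Archive-B are hypothetical names for the ArchiveAudit tier.
TIERS = [
    ("IncomingMessages", ["Incoming-A", "Incoming-B"]),
    ("LiveProdSubscriptions", ["Live-A", "Live-B"]),
    ("DevUAT", ["Dev-A", "Dev-B"]),
    ("ArchiveAudit", ["Archive-A", "Archive-B"]),
]


def destinations(instance):
    """Outgoing Destinations for an instance: one per peer in the same tier,
    plus one Destination whose failover list is every instance in the next tier."""
    for index, (_, members) in enumerate(TIERS):
        if instance in members:
            dests = [[peer] for peer in members if peer != instance]
            if index + 1 < len(TIERS):
                dests.append(list(TIERS[index + 1][1]))
            return dests
    raise KeyError(instance)


def deliver(publish_to, down=frozenset()):
    """Publish one message to publish_to and return every instance that ends
    up with a copy. Assumes PassThrough .* everywhere and that each Destination
    uses the first address in its list that is not down."""
    received = {publish_to}
    pending = [publish_to]
    while pending:
        sender = pending.pop()
        for failover_list in destinations(sender):
            live = [addr for addr in failover_list if addr not in down]
            # An instance that already has the message discards the duplicate,
            # so it is not forwarded a second time.
            if live and live[0] not in received:
                received.add(live[0])
                pending.append(live[0])
    return received


ALL = {name for _, members in TIERS for name in members}
# Lose one server on every tier; the surviving servers still get the message.
DOWN = {"Incoming-B", "Live-A", "Dev-B", "Archive-A"}
print(sorted(deliver("Incoming-A", DOWN)))  # ['Archive-B', 'Dev-A', 'Incoming-A', 'Live-B']
```

With no servers down, `deliver("Incoming-A")` returns all eight instances.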

An archive of the configuration for these instances is available for download.

AMPS Replication

For a basic introduction to AMPS replication, see From Zero to Fault-Tolerance Hero and the AMPS User Guide section on High Availability.

For the purposes of creating a cascading mesh, the following aspects of AMPS replication are most important:

- AMPS replication is always point-to-point, from an originating instance to a receiving instance.
- By default, AMPS replication does not replicate messages that arrive over a replication connection. An instance in a mesh uses the PassThrough directive to further replicate messages that arrive at the instance via replication. This isn’t necessary if there are only two instances in use, but is typically required for a multi-instance installation to replicate correctly.

  PassThrough in a given Destination specifies that when a message is received via replication from an instance in a matching Group, it is eligible to be replicated by this instance to this Destination. If no PassThrough is present in the Destination configuration, replicated messages will not be further replicated by this instance. Notice that the PassThrough setting applies to the Group name of the instance the message is received from, and not any other instance that the message may have been replicated through.
- An outgoing message stream from an originating instance is specified by adding a Replication/Destination to the configuration of the outgoing instance, one per outgoing message stream.
- AMPS duplicate detection uses the name of the publisher and the sequence number assigned by the publisher. If the same message is received more than once (for example, over different replication paths), only the first copy received is written to the transaction log. Any other copy will be discarded. Notice that, if there are multiple paths to an instance, there is no guarantee as to which path the first message will take. This is especially important in a failover situation or if there is network congestion, but can also be true even when the network, hardware, and AMPS instances are all performing as expected.
- AMPS replication validation, by default, ensures that both sides of a replication connection provide a complete replica of the messages being replicated. Since this topology intentionally does not replicate messages from the lower-priority environments to the higher priority environments, the configuration will need to relax some of the validation rules.
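The PassThrough and duplicate-detection rules can be sketched as a small Python class. This is an illustrative model under simplifying assumptions, not AMPS internals:

- The Instance class and the message dictionaries are hypothetical.
- The passthrough mapping goes from a destination name to a regular expression, with None meaning the Destination has no PassThrough.
- Python's `re.fullmatch` stands in for AMPS's regular expression matching.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical model of one AMPS instance's replication decisions."""
    name: str
    # destination name -> PassThrough regex, or None if the Destination has no PassThrough
    passthrough: dict
    log: list = field(default_factory=list)  # stands in for the transaction log
    seen: set = field(default_factory=set)   # (publisher, sequence) pairs already logged

    def receive(self, msg, from_group=None):
        """Handle a message published directly (from_group=None) or replicated
        from an instance in from_group. Return the destinations the message is
        eligible to be replicated to."""
        key = (msg["publisher"], msg["seq"])
        if key in self.seen:
            return []  # duplicate: discarded, not logged, not forwarded
        self.seen.add(key)
        self.log.append(msg)
        if from_group is None:
            # Direct publishes are eligible for every Destination (topic filters aside).
            return list(self.passthrough)
        # Only the Group of the immediate sender is checked against PassThrough.
        return [dest for dest, pattern in self.passthrough.items()
                if pattern is not None and re.fullmatch(pattern, from_group)]


live_b = Instance("Live-B", {"to-Live-A": ".*", "to-DevUAT": "LiveProdSubscriptions"})
first = {"publisher": "pub-1", "seq": 1}
print(live_b.receive(first, from_group="IncomingMessages"))       # ['to-Live-A']
print(live_b.receive(first, from_group="LiveProdSubscriptions"))  # [] (duplicate)
```

The narrow "LiveProdSubscriptions" pattern on `to-DevUAT` is deliberately restrictive, so that the effect of PassThrough is visible. The mesh in this post uses .* everywhere.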

The diagram above shows one possible arrangement of replication connections for this mesh. To make this mesh more resilient, each instance in a given tier will be configured to fail over replication to either of the instances in the next tier.

Determining the Outgoing Destinations

To be sure that every message reaches every instance in the mesh, each instance in the mesh replicates to the other instance at the same tier.

Each instance also ensures that messages are replicated to the next tier. Since the instances in the next tier will also replicate to each other, the configuration uses a single Destination with the addresses of both instances in the next tier as failover equivalents. Once a message reaches an instance in the next tier, that instance will be sure that the message is replicated to the other server in the tier.
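A Destination with two InetAddr entries can be pictured as a connection that targets one address at a time. The Python sketch below is a hypothetical model, not a description of AMPS's exact reconnection logic. It assumes the connection stays on the address it is using and moves to the next listed address only when that one is unreachable. The addresses are the Live-A and Live-B addresses from the example configuration in this post.

```python
class FailoverDestination:
    """Hypothetical model of a Destination whose InetAddr entries are failover equivalents."""

    def __init__(self, addresses):
        self.addresses = list(addresses)
        self.current = 0  # index of the address currently in use

    def target(self, reachable):
        """Return the address to replicate to, given the set of reachable
        addresses, or None if none of them can be reached."""
        for _ in range(len(self.addresses)):
            addr = self.addresses[self.current]
            if addr in reachable:
                return addr
            # Current address is down: move to the next failover equivalent.
            self.current = (self.current + 1) % len(self.addresses)
        return None


to_live = FailoverDestination(["localhost:4101", "localhost:4102"])  # Live-A, Live-B
both = {"localhost:4101", "localhost:4102"}
print(to_live.target(both))                # localhost:4101
print(to_live.target({"localhost:4102"}))  # localhost:4102 (Live-A is down)
print(to_live.target(both))                # localhost:4102 (stays on the working address)
```

Either target is acceptable in this mesh. Whichever Live instance receives the message replicates it to its peer.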

Determining the PassThrough Configuration

For a mesh like this, where the intent is for each message to reach every instance in the mesh, it’s important that each instance pass through messages from every upstream instance.

Since the intent is to pass through every message, the easiest way to specify PassThrough is to provide a regular expression that matches any group. AMPS configurations, by convention, typically use the regular expression .* (any quantity of any character) to match anything.

If it were necessary to be more explicit, though, the PassThrough configuration for each instance would list the Group name of every instance from which it could receive an incoming message.
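The difference between .* and an explicit list of Group names can be checked with a few lines of Python. In this sketch, `passes_through` and `explicit_pattern` are hypothetical helpers. Python's `re.fullmatch` stands in for the regular expression matching AMPS performs, so check the AMPS documentation for its exact matching semantics.

```python
import re

GROUPS = ["IncomingMessages", "LiveProdSubscriptions", "DevUAT", "ArchiveAudit"]


def passes_through(pattern, group):
    """True if a message received from an instance in `group` would be
    passed through by a Destination with PassThrough `pattern`."""
    return re.fullmatch(pattern, group) is not None


def explicit_pattern(groups):
    """Build an explicit alternative to .* that names each Group."""
    return "|".join(re.escape(g) for g in groups)


explicit = explicit_pattern(["IncomingMessages", "LiveProdSubscriptions"])
print(explicit)  # IncomingMessages|LiveProdSubscriptions
print([g for g in GROUPS if passes_through(".*", g)])
print([g for g in GROUPS if passes_through(explicit, g)])
```

The .* pattern accepts every group. The explicit pattern accepts only the two groups it names, so it must be updated whenever a new upstream group is added.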

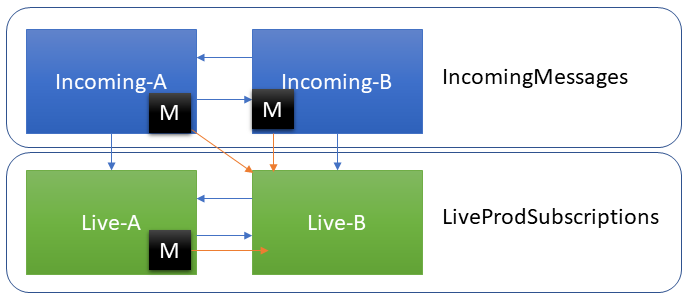

For example, as shown in the diagram above, when a message is initially published to Incoming-A, the Live-B instance could receive the first copy of that message from the Incoming-B instance in the IncomingMessages group, from the Incoming-A instance in the IncomingMessages group, or from the Live-A instance in the LiveProdSubscriptions group. A PassThrough configuration for an outgoing Destination from this instance must specify at least IncomingMessages|LiveProdSubscriptions for the Live-B instance to replicate the message further. For convenience, an instance would typically specify .* to match any incoming Group.

Likewise, the Dev-A instance could receive a replicated message from the Live-A instance in the LiveProdSubscriptions group, from the Live-B instance in the LiveProdSubscriptions group, or from the Dev-B instance in the DevUAT group. A PassThrough configuration for an outgoing Destination from this instance should specify at least LiveProdSubscriptions|DevUAT. Again, for convenience, an instance would typically specify .* to match any incoming Group.
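Working out the minimum explicit PassThrough value by hand gets tedious for larger meshes. The Python sketch below derives it from the topology. It assumes the replication layout described in this post: each instance receives from its peer in the same tier and from the instances in the previous tier. The TIERS table and the helper names are hypothetical, and Archive-A and Archive-B are placeholder names for the ArchiveAudit tier.

```python
# Hypothetical tier table; Archive-A / Archive-B are placeholder names.
TIERS = [
    ("IncomingMessages", ["Incoming-A", "Incoming-B"]),
    ("LiveProdSubscriptions", ["Live-A", "Live-B"]),
    ("DevUAT", ["Dev-A", "Dev-B"]),
    ("ArchiveAudit", ["Archive-A", "Archive-B"]),
]


def incoming_groups(instance):
    """Groups of the instances that can replicate directly to `instance`:
    its peers in the same tier, plus every instance in the previous tier."""
    for index, (group, members) in enumerate(TIERS):
        if instance in members:
            groups = set()
            if len(members) > 1:
                groups.add(group)                # peer in the same tier
            if index > 0:
                groups.add(TIERS[index - 1][0])  # upstream tier
            return groups
    raise KeyError(instance)


def minimal_passthrough(instance):
    """Explicit PassThrough value naming every incoming Group (order is irrelevant)."""
    return "|".join(sorted(incoming_groups(instance)))


print(minimal_passthrough("Live-B"))  # IncomingMessages|LiveProdSubscriptions
print(minimal_passthrough("Dev-A"))   # DevUAT|LiveProdSubscriptions
```

The Dev-A result names the same two groups as LiveProdSubscriptions|DevUAT. Order does not matter in a regular expression alternation.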

Sync or Async Acknowledgement?

One other choice that needs to be made for each replication destination is the type of acknowledgement to be used for that destination. The acknowledgement type controls when the instance of AMPS considers a message to be safely persisted and acknowledges that persistence.

With sync acknowledgement, an instance of AMPS will wait for the replication destination to acknowledge that a message has been persisted before considering the message to be safely persisted and acknowledging the message as persisted (to either a publisher or an upstream replication instance).

With async acknowledgement, an instance of AMPS will consider the message to be safely persisted when it is persisted to the local transaction log.
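The two acknowledgement rules can be summarized as a predicate. This is a simplified Python model, not AMPS code, and `can_acknowledge` is a hypothetical helper. The model assumes an instance acknowledges once two conditions hold: the message is in its local transaction log, and every sync destination has acknowledged it. Async destinations are not waited for.

```python
def can_acknowledge(persisted_locally, destinations):
    """destinations: list of (sync_type, acknowledged) pairs, one per
    outgoing replication Destination. Returns True once the message may be
    acknowledged to the publisher or upstream instance."""
    if not persisted_locally:
        return False
    # Only sync destinations are waited for; async ones are ignored here.
    return all(acked for sync_type, acked in destinations if sync_type == "sync")


# Incoming-A in this mesh: sync to Incoming-B, async to the Live tier.
print(can_acknowledge(True, [("sync", False), ("async", False)]))  # False
print(can_acknowledge(True, [("sync", True), ("async", False)]))   # True
```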

The acknowledgement type doesn’t affect how quickly AMPS replicates messages. However, because async acknowledgement may acknowledge a message before the message has been received by a downstream instance, it is important to be sure that a message source (either a publisher or an upstream instance) does not fail over between two AMPS instances that replicate over an async connection.

For this mesh, this means that we can use async acknowledgement between tiers, but we need to use sync acknowledgement within a tier. For the IncomingMessages tier, a publisher may fail over between Incoming-A and Incoming-B, so those instances must use sync acknowledgement to replicate to each other. In the other tiers, a replication connection may fail over between an -A instance and a -B instance, so the connections between those two instances must use sync acknowledgement.

Since a connection will not fail over from one tier to another, and since every instance fully replicates the message stream (thanks to the PassThrough configuration discussed in the previous section), it’s reasonable to use async acknowledgement between the tiers. This can reduce the storage needs for the applications that publish to the IncomingMessages instances, since messages could potentially be acknowledged to those publishers before the messages are replicated to all of the downstream instances.
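The rule for choosing between sync and async can be written down directly: a connection needs sync acknowledgement if a publisher or an upstream replication connection could fail over from one end of it to the other. The Python sketch below encodes that rule for this mesh. FAILOVER_SETS and `required_sync_type` are hypothetical names, and Archive-A and Archive-B are placeholders.

```python
# Sets of instances that a publisher or an upstream replication connection
# may fail over between. Archive-A / Archive-B are placeholder names.
FAILOVER_SETS = [
    {"Incoming-A", "Incoming-B"},  # publishers fail over between these
    {"Live-A", "Live-B"},          # the IncomingMessages tier's Destination fails over between these
    {"Dev-A", "Dev-B"},
    {"Archive-A", "Archive-B"},
]


def required_sync_type(source, destination):
    """Return "sync" if a message source could fail over from one end of the
    connection to the other; otherwise "async" is acceptable."""
    for members in FAILOVER_SETS:
        if source in members and destination in members:
            return "sync"
    return "async"


for src, dst in [("Incoming-A", "Incoming-B"), ("Incoming-A", "Live-A"), ("Live-B", "Live-A")]:
    print(src, "->", dst, required_sync_type(src, dst))
```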

Validation Rules

As mentioned earlier, by default AMPS replication validation tries to ensure that messages published to a replicated topic on any instance will be delivered to all other replicated instances. In many cases, this is exactly what replication is intended to do.

This replicated mesh, though, does not replicate messages from a lower-priority environment to a higher-priority environment. Because of this, the configuration will need to relax some of the validation checks.

Between tiers (for example, replication between Live-A and either Dev-A or Dev-B), messages are only replicated in one direction. The replicate validation check ensures that topics that are replicated to a given instance are also replicated from that instance. To allow messages to be replicated in only one direction, the configuration excludes the replicate validation check.

Within a tier (for example, replication between Live-A and Live-B), every message will be fully replicated, so there is no need to disable the replicate validation check within a tier.

However, the cascade validation check ensures that every destination that messages are replicated to enforces the same replication checks on its own destinations. Since each instance excludes the replicate check on its Destination to the next tier, the configurations within a tier need to exclude the cascade validation check.

Because the configurations within a tier exclude the cascade validation check, the Destinations in the previous tier that replicate to that tier must also exclude the cascade validation check.

So, for each tier, we need to relax replication validation as follows:

- Within a tier, the configurations will exclude the cascade validation check.
- Between tiers, the configurations will exclude the replicate and cascade validation checks.
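The two rules above depend only on whether a Destination stays within a tier or points to the next tier. The hypothetical Python helper below numbers the tiers from 0 (IncomingMessages) to 3 (ArchiveAudit). It returns the ExcludeValidation value that matches the example configuration in this post.

```python
def exclude_validation(source_tier, dest_tier):
    """ExcludeValidation value for a Destination from source_tier to dest_tier."""
    if dest_tier == source_tier:
        return "cascade"            # within a tier
    if dest_tier == source_tier + 1:
        return "replicate,cascade"  # one-way replication to the next tier
    raise ValueError("this mesh only replicates within a tier or to the next tier")


print(exclude_validation(0, 0))  # cascade  (Incoming-A -> Incoming-B)
print(exclude_validation(1, 2))  # replicate,cascade  (Live-A -> DevUAT tier)
```

The helper raises an error for any other pair, because this mesh never replicates upward or skips a tier.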

Putting The Plan Together

To summarize, creating a cascading mesh involves the following steps:

- Sketch out the mesh:
  - Each instance should replicate to every other instance in the same tier.
  - Each instance should have an outgoing connection that can fail over to any of the instances on the next tier.
- For every instance, ensure that the PassThrough configuration is either .*, or includes the Group name for every incoming replication connection.
- If there are any replication connections where it is not possible for either a publisher or a replication connection to fail over from one side of the connection to the other side of the connection (in this example, connections between tiers), consider whether using async acknowledgement might reduce the resource needs for publishers by providing acknowledgement more quickly.
- Relax replication validation: exclude the cascade check within a tier, and exclude both the replicate and cascade checks between tiers.
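Applied mechanically, these steps produce the same Destination layout for every instance. The Python sketch below is a hypothetical helper that returns each instance's outgoing Destinations as dictionaries. The names `plan` and TIERS, and the dictionary keys, are illustrative only, and Archive-A and Archive-B are placeholders. Its output for Incoming-A lines up with the Incoming-A configuration in this post.

```python
# Hypothetical tier table; Archive-A / Archive-B are placeholder names.
TIERS = [
    ("IncomingMessages", ["Incoming-A", "Incoming-B"]),
    ("LiveProdSubscriptions", ["Live-A", "Live-B"]),
    ("DevUAT", ["Dev-A", "Dev-B"]),
    ("ArchiveAudit", ["Archive-A", "Archive-B"]),
]


def plan(instance):
    """Return the outgoing Destinations for `instance` as dictionaries."""
    for index, (group, members) in enumerate(TIERS):
        if instance not in members:
            continue
        # Replicate to every peer in the same tier: sync, exclude cascade.
        dests = [
            {"group": group, "targets": [peer], "sync": "sync",
             "exclude": "cascade", "passthrough": ".*"}
            for peer in members if peer != instance
        ]
        # One Destination that can fail over across the next tier:
        # async, exclude replicate and cascade.
        if index + 1 < len(TIERS):
            next_group, next_members = TIERS[index + 1]
            dests.append({"group": next_group, "targets": list(next_members),
                          "sync": "async", "exclude": "replicate,cascade",
                          "passthrough": ".*"})
        return dests
    raise KeyError(instance)


for dest in plan("Incoming-A"):
    print(dest)
```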

For example, applying these steps, the Replication section of the Incoming-A instance in the example configuration is as follows:

```xml
<Replication>
  <Destination>
    <Name>Incoming-B</Name>
    <Group>IncomingMessages</Group>
    <PassThrough>.*</PassThrough>
    <Topic>
      <Name>.*</Name>
      <MessageType>json</MessageType>
      <ExcludeValidation>cascade</ExcludeValidation>
    </Topic>
    <SyncType>sync</SyncType>
    <Transport>
      <InetAddr>localhost:4002</InetAddr> <!-- Incoming-B -->
      <Type>amps-replication</Type>
    </Transport>
  </Destination>
  <Destination>
    <Group>LiveProdSubscriptions</Group>
    <PassThrough>.*</PassThrough>
    <Topic>
      <Name>.*</Name>
      <MessageType>json</MessageType>
      <ExcludeValidation>replicate,cascade</ExcludeValidation>
    </Topic>
    <SyncType>async</SyncType>
    <Transport>
      <InetAddr>localhost:4101</InetAddr> <!-- Live-A -->
      <InetAddr>localhost:4102</InetAddr> <!-- Live-B -->
      <Type>amps-replication</Type>
    </Transport>
  </Destination>
</Replication>
```

The destination configuration for Live-A follows a similar pattern. Notice that since the LiveProdSubscriptions group is not intended to receive new publishes, the instance does not specify replication back to any instance in the IncomingMessages group.

The Replication section for Live-A looks like this:

```xml
<Replication>
  <Destination>
    <Group>LiveProdSubscriptions</Group>
    <PassThrough>.*</PassThrough>
    <Topic>
      <Name>.*</Name>
      <MessageType>json</MessageType>
      <ExcludeValidation>cascade</ExcludeValidation>
    </Topic>
    <SyncType>sync</SyncType>
    <Transport>
      <InetAddr>localhost:4102</InetAddr> <!-- Live-B -->
      <Type>amps-replication</Type>
    </Transport>
  </Destination>
  <Destination>
    <Group>DevUAT</Group>
    <PassThrough>.*</PassThrough>
    <Topic>
      <Name>.*</Name>
      <MessageType>json</MessageType>
      <ExcludeValidation>replicate,cascade</ExcludeValidation>
    </Topic>
    <SyncType>async</SyncType>
    <Transport>
      <InetAddr>localhost:4201</InetAddr> <!-- Dev-A -->
      <InetAddr>localhost:4202</InetAddr> <!-- Dev-B -->
      <Type>amps-replication</Type>
    </Transport>
  </Destination>
</Replication>
```

Try it Yourself

An archive of the configuration for these instances is available for download. These configuration files are set up to run on a single system, but can easily be adapted to a multi-server configuration (by using the host name for each instance in the replication InetAddr).
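When moving to multiple servers, it can help to generate the Replication section instead of editing each file by hand. The Python sketch below uses the standard library's `xml.etree.ElementTree` to build the Incoming-A Replication section shown earlier from a mapping of instance names to host:port strings. The helper names and the host names in the example are hypothetical. The element names are copied from the example configuration.

```python
import xml.etree.ElementTree as ET


def destination(group, addrs, sync_type, exclude, name=None):
    """Build one Replication/Destination element (hypothetical helper)."""
    dest = ET.Element("Destination")
    if name:
        ET.SubElement(dest, "Name").text = name
    ET.SubElement(dest, "Group").text = group
    ET.SubElement(dest, "PassThrough").text = ".*"
    topic = ET.SubElement(dest, "Topic")
    ET.SubElement(topic, "Name").text = ".*"
    ET.SubElement(topic, "MessageType").text = "json"
    ET.SubElement(topic, "ExcludeValidation").text = exclude
    ET.SubElement(dest, "SyncType").text = sync_type
    transport = ET.SubElement(dest, "Transport")
    for addr in addrs:  # multiple InetAddr entries are failover equivalents
        ET.SubElement(transport, "InetAddr").text = addr
    ET.SubElement(transport, "Type").text = "amps-replication"
    return dest


def incoming_a_replication(hosts):
    """hosts: hypothetical mapping from instance name to "host:port"."""
    repl = ET.Element("Replication")
    repl.append(destination("IncomingMessages", [hosts["Incoming-B"]],
                            "sync", "cascade", name="Incoming-B"))
    repl.append(destination("LiveProdSubscriptions", [hosts["Live-A"], hosts["Live-B"]],
                            "async", "replicate,cascade"))
    return repl


hosts = {
    "Incoming-B": "incoming-b.example.com:4002",
    "Live-A": "live-a.example.com:4101",
    "Live-B": "live-b.example.com:4102",
}
replication = incoming_a_replication(hosts)
ET.indent(replication)  # pretty-printing; requires Python 3.9+
print(ET.tostring(replication, encoding="unicode"))
```

The generated XML omits the `<!-- ... -->` comments from the hand-written file. Otherwise it has the same structure.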